A Fast Fuzzy Search Implementation

In recent episodes of Swift Talk, we've been building a fast fuzzy search implementation. Fuzzy search is typically used to search a large list of file names by typing a few relevant characters (like Open Quickly in Xcode). For example, to find a file named Sources/Slides/ContentView.swift, you might type SSCV. The simplest version of a fuzzy search algorithm could be implemented using a regular expression: for the search string SSCV, the regular expression would be something like *S*S*C*V*. In other words, it needs to match the characters SSCV, in that order, but with any number of other characters before, after or in between the characters.

Here's another simple implementation:

extension String {

func fuzzyMatch(_ needle: String) -> Bool {

if needle.isEmpty { return true }

var remainder = needle[...]

for char in self {

if char == remainder[remainder.startIndex] {

remainder.removeFirst()

if remainder.isEmpty { return true }

}

}

return false

}

}

The code works well and is quite fast in an optimized build. We can improve performance by comparing the UTF8View of both strings instead of their characters. This might return some incorrect results if your strings contains things like combining sequences, but is typically not a problem when searching files.

Scoring

The algorithm works very well if all you want to know is whether a filename matches the search string, but in most fuzzy search implementations you're searching a list of files, and want to rank them by how well they match. Consider the following two file names, which both match string:

source/test/regression/graph.swift

^ ^ ^ ^ ^ ^

source/string.swift

^^^^^^

When we search for the word string, we'd like the first file to be ranked below the second file. Although the first file contains the characters string in the right order, they are separated by large gaps. The second file contains them without any gaps. In other words, we don't just want to match file names, we also want to rank them based on the quality of the match, like the number of gaps and gap sizes.

We can modify our algorithm to compute a score: when we match a character, we remember the position of that match. We then take the distance between the previous match and current match (the gap) and compute a gap penalty. The gap penalty could be very straightforward, such as the length of the gap, or it could be more complicated, for instance as a constant plus the square root of the length. What function you use depends on the exact use case. In addition, you might want to penalize the gap before the first match and the gap after the last match separately (or not at all).

Like before, we can make our algorithm faster by running it on

UTF8Viewinstead of onString, but keep in mind that this can change the scoring as well. For example, gap lengths between matches might differ as a character might consist of multiple bytes.

Matching a character isn't as straightforward as just calling == either. For example, when searching for the character e, we almost certainly want to match both e and E. Likewise, we might want to give bonus points when a matched character comes immediately after a separator such as /, . or _.

In the second episode of the series, we add scoring to the algorithm.

Better Scoring

Unfortunately, the scoring algorithm we described above still isn't good enough, as it doesn't calculate the optimal score. It matches characters greedily. For example, if we search for the characters string in source/string.swift, it greedily matches the first s, computes the gap penalty for ource/s and then matches tring. To compute the optimal score we need to consider two cases when we find a match: we need to compute the case in which we accept the match, and the case in which we skip the character and try to find it later in the string. Then we simply take the maximum score of both options.

We can implement that (naively) using a recursive algorithm:

extension Substring {

func fuzzyMatch2(_ needle: Substring, gap: Int?) -> Score? {

guard !needle.isEmpty else { return Score() }

guard !self.isEmpty else { return nil }

let skipScore = { self.dropFirst().fuzzyMatch2(needle, gap: gap.map { $0 + 1 }) }

if self.first == needle.first {

guard let s = dropFirst().fuzzyMatch2(needle.dropFirst(), gap: 0)

else { return nil }

var acceptScore = Score()

if let g = gap, g > 0 {

acceptScore.add(-g, reason: "Gap \(g)")

}

acceptScore.add(1, reason: "Match \(first!)")

acceptScore.add(s)

guard let skip = skipScore() else { return acceptScore }

return Swift.max(skip, acceptScore)

} else {

return skipScore()

}

}

}

Note that the algorithm above isn't very fast because it does a lot more work than it needs to. However, the quality of the results is much better than before. Next up, let's make it fast.

Fast and Correct Scoring

To make things faster, we can use dynamic programming. This means we break up our algorithm recursively in such a way that each step depends directly on the previous step, so that we can compute our result step by step. We can take inspiration from the Smith-Waterman algorithm and build a two-dimensional matrix that holds the intermediate results. We build up this matrix row by row, where each row represents a character in the needle, and each column represents a character in the filename we're looking at.

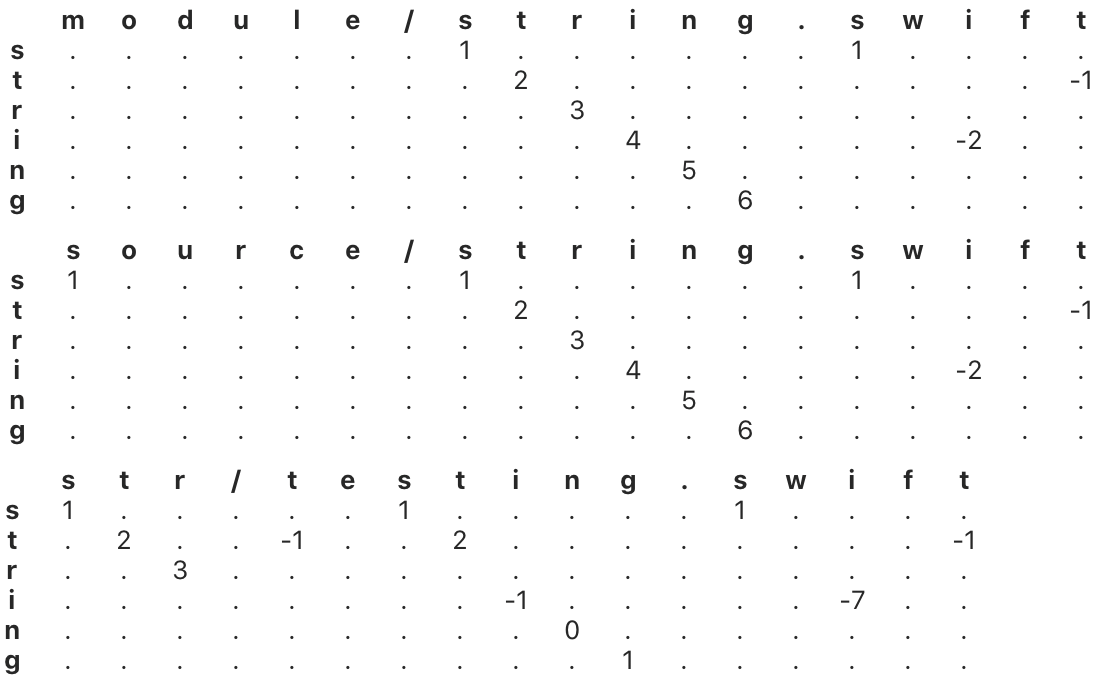

For example, when we search for string in the filename str/testing.swift, we start by matching the character s. In our current implementation, we have a zero penalty for starting gaps, and every matching character gets a score of one. That means that the first row in the matrix contains a score of one for each occurrence of s.

The second row matches a t. When see a t we can compute the score by taking the maximum score of all values in the previous row that are to the left of our t. For each of those matches we compute 1 + previousScore - gapPenalty. In this case, the final value in the second row is computed as 1 + 1 - 3.

The score for a filename is the maximum value in the bottom row. For the three filenames above, it's 6, 6 and 1, respectively.

In episode 4 of the series, we migrate from our recursive algorithm to this matrix-based version. In the sample code we also provide a GUI that visualizes the matrix, which is great for debugging. When you're playing around with scoring functions this can be very helpful.

Optimizations

The advantage of a fuzzy search is that we can get to our file very quickly without having to type many characters. This means that the algorithm has to be both correct, yielding the best possible results with few characters, and fast. Now that our algorithm is matrix-based with a few loops, we can make it fast. Our unoptimized version searches 20,000 files (the Swift code base) in about 400ms; to make it feel fast we want it to run in under 16ms, which is the time for a display pass when rendering at 60 frames per second.

There are a number of different techniques we show in the series. As a first step, we changed our algorithm to work on UTF8View instead of String, which brought the running time down to about 100ms. Because of Swift's built-in collections, this change is very simple to make. We further improve efficiency by working on arrays of UTF-8 code units (i.e. bytes) and reached 80ms.

In our algorithm, we loop over the previous row to find the maximum score for the previous character in our needle. Rather than starting at the beginning of that row, we can start at the index of the first match. Caching the index brought it down to 45ms.

Up until this point our algorithm was a generic method that worked on any Collection type as long as the Element was Equatable. Once we changed it to be a method on Array we could make use of the fact that Array has integer indices, which helps us achieve 37ms.

As a final optimization, we parallelized the search by splitting our array of file names into chunks. Matching the number of chunks to processor cores gave us the best results. This lets us search 20,000 files in about 11ms, and comfortably achieve 60 fps. The benchmarks were done while recording our screen, which seems to slow everything down by quite a bit; without screen recording we saw running times of around 6ms. We can even search the ~70,000 files of the Linux kernel code base in under 16ms.

These optimizations are documented in the fifth and sixth episode of the series.

There are probably a number of further optimizations we could attempt, some of which we discuss in the final episode, but for our purposes the algorithm is fast enough.

Conclusion

We optimized our searching algorithm quite heavily by changing it from a recursive algorithm to a dynamic programming algorithm that builds up intermediate results. This allowed us to further optimize by working on different data structures (UTF-8 bytes instead of Unicode characters), doing less work (e.g. caching some values), and doing work in parallel.

You can watch us discuss and implement these optimizations by subscribing to Swift Talk. As always, the first episode is free to watch.

References

There are many open-source implementations of similar already-existing algorithms. Here are a few we found helpful: